Towards Robust Deep Hiding Under Non-Differentiable Distortions for Practical Blind Watermarking

Abstract

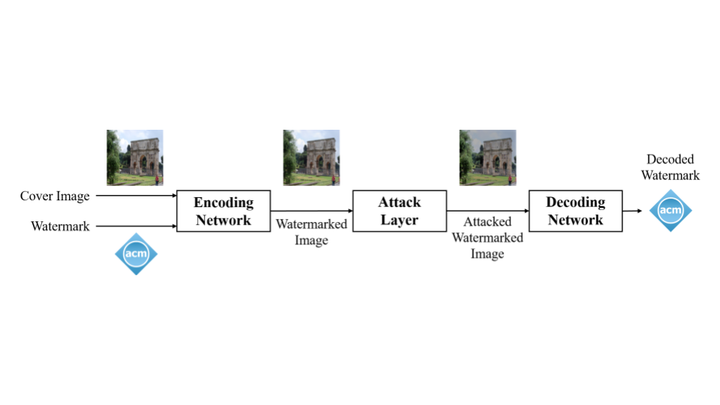

Data hiding is a widely used approach for proving ownership through blind watermarking. Deep learning has become common in data hiding, and inserting an attack simulation layer (ASL) after the watermarked image is generally recognized as the most effective way to make the pipeline robust against distortions. Despite its wide usage, the robustness gained from an ASL is usually interpreted through the lens of data augmentation. Our work instead examines this gain from a new perspective by disentangling the forward and backward propagation of the ASL. We find that the main contributing component is forward propagation rather than backward propagation. This observation motivates us to use a forward ASL, which makes the pipeline compatible with non-differentiable and/or black-box distortions, such as lossy (JPEG) compression and Photoshop effects. Extensive experiments demonstrate the efficacy of this simple approach.
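The distinction between forward and backward propagation can be illustrated with a minimal pure-Python sketch. It reflects our own reading, not the paper's implementation: a forward-only ASL applies the distortion in the forward pass but passes the gradient straight through in the backward pass, by writing the distorted image as x + (distort(x) - x) and treating the difference as constant noise. The names `forward_asl` and `quantize`, the quantization step, and the toy vectors are all hypothetical; `quantize` only stands in for any non-differentiable or black-box distortion, such as a JPEG codec.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def quantize(x: Vector, step: float = 0.25) -> Vector:
    """Hypothetical stand-in for a non-differentiable distortion
    (JPEG-style quantization); its true derivative is zero almost everywhere."""
    return [step * round(v / step) for v in x]


def forward_asl(
    x: Vector, distort: Callable[[Vector], Vector]
) -> Tuple[Vector, Callable[[Vector], Vector]]:
    """Hypothetical forward-only ASL (an assumption, not the paper's code).

    Forward: returns distort(x), expressed as x + noise.
    Backward: noise = distort(x) - x is treated as a detached constant,
    so the layer's Jacobian is taken to be the identity.
    """
    noise = [d - v for d, v in zip(distort(x), x)]  # distortion as additive noise
    y = [v + n for v, n in zip(x, noise)]  # equals distort(x) up to float rounding

    def backward(grad_y: Vector) -> Vector:
        # d(x + const)/dx = I: pass the upstream gradient through unchanged
        return list(grad_y)

    return y, backward


if __name__ == "__main__":
    x = [0.1, 0.6, -0.3]       # toy "watermarked image"
    target = [1.0, 0.0, -1.0]  # toy decoder target
    y, backward = forward_asl(x, quantize)
    # Gradient of the squared error sum((y - target)^2) with respect to y
    grad_y = [2.0 * (yi - ti) for yi, ti in zip(y, target)]
    grad_x = backward(grad_y)  # non-zero, although quantize has zero derivative a.e.
    print("distorted:", y)
    print("grad wrt x:", grad_x)
```

In an autodiff framework, the same trick is typically written as `x + jax.lax.stop_gradient(distort(x) - x)` in JAX or `x + (distort(x) - x).detach()` in PyTorch. Because `distort` is only evaluated and never differentiated, it can in principle be an external codec or editing tool.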